As the pharmaceutical industry shifts from isolated pilot initiatives to full-scale deployment, a sobering truth emerges: integrating artificial intelligence (AI) into existing quality management systems (QMS) is anything but simple.

In our previous blog post, we explored the transformative potential of AI in reshaping pharma QMS: enhancing decision-making through data-driven insights, streamlining quality control and documentation, and enabling predictive quality assurance and event oversight. This technological shift promises greater efficiency and agility, which many organisations are eager to embrace.

Yet before diving into the how, one critical question must be addressed:

What’s standing in the way of AI adoption in pharma QMS?

This second blog post in our series aims to answer that question. As more companies move toward AI integration, our mission is to equip pharma leaders with the insights they need to adopt AI with confidence. In this post, we explore common obstacles in pharmaceutical QMS and uncover the hidden pitfalls of AI adoption, paving the way for a seamless, forward-thinking and successful implementation.

4 key barriers

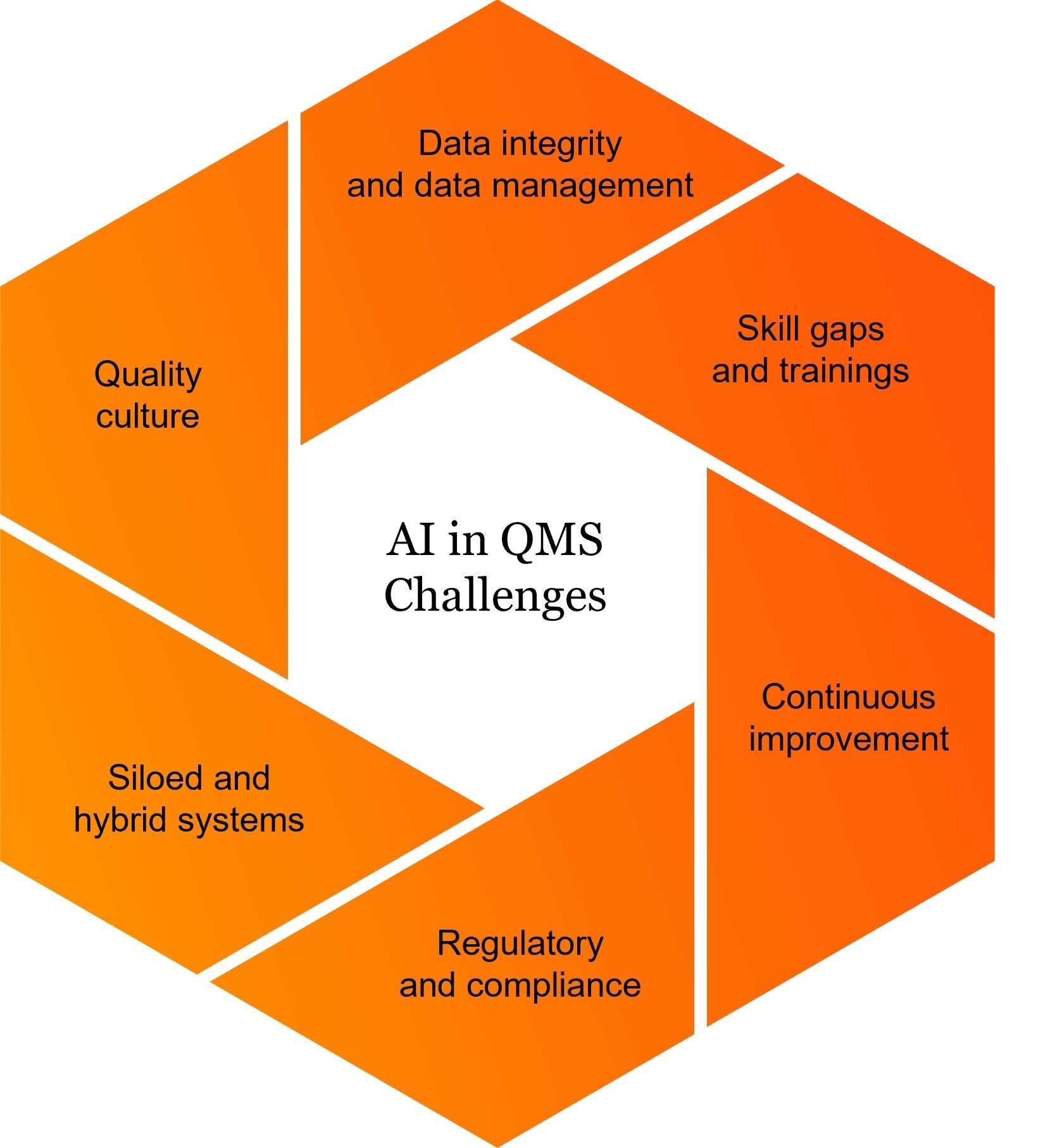

To better understand the roadblocks to successful AI integration, we have identified four key areas where challenges most commonly arise: data-related limitations, technical and systems constraints, regulatory and compliance complexities, and workforce and organisational readiness. Each of these areas presents distinct barriers that, if not addressed proactively, can significantly slow down or even derail AI adoption efforts.

1 Data-related

The effectiveness of AI systems relies heavily on the quality, consistency and availability of data. Without reliable input, these systems cannot deliver credible insights or drive meaningful improvements. Many organisations must first assess their internal processes before moving toward AI adoption.

1.1. Data quality and consistency

One major concern is the rigidity of the regulatory infrastructure, which often lacks the flexibility to support modern data practices. Compounding this is the limited standardisation and digitalisation of data across the industry, as many companies still rely on unstructured sources such as scanned documents or handwritten records. AI performance also depends on the volume and consistency of the available data, which must be robust enough to train and sustain these systems. Inadequate data quality not only limits system functionality but can also introduce algorithmic bias, producing distorted outcomes that may compromise decision-making and put patient safety at risk. Addressing these core issues is critical for any organisation seeking to implement AI responsibly and effectively.

1.2. Data silos

Many companies face persistent challenges in promoting cross-functional collaboration due to limited internal connectivity between departments. These same structural issues often resurface when integrating AI into QMS. Among the most critical is the fragmented nature of data systems, which frequently lack interoperability, rely on rigid architectures or operate in isolation. Since AI models require unified and well-integrated datasets to generate reliable outputs, such fragmentation hinders data flow and real-time analytics. Breaking down these silos is imperative to enable seamless end-to-end product lifecycle management, from drug development and clinical trial optimisation to enhanced pharmacovigilance.

2 Technical and systems

Systems integration is a key challenge in this context, as many organisations continue to rely on legacy QMS platforms that are not compatible with modern AI tools. Further technical concerns complicate AI adoption, particularly around data safety, security and overall system resilience.

2.1. Cybersecurity and privacy

AI has the potential to significantly enhance QMS security, but poor integration can introduce serious vulnerabilities. In the pharmaceutical industry, deploying AI often involves processing vast datasets containing sensitive information about patients, customers, suppliers or employees. This raises substantial data privacy concerns that can lead to regulatory violations, for example when anonymised data is re-identified. AI systems may also be targeted by malicious actors, creating cybersecurity threats such as corrupted outputs or breaches within legacy QMS environments. If left unaddressed, these risks could compromise both patient safety and regulatory compliance.

3 Regulatory and compliance

In the pharmaceutical sector, regulatory compliance is far more than a procedural formality; it is a strategic necessity. As AI technologies become increasingly embedded in QMS, companies must keep pace with a growing and complex regulatory landscape that extends beyond traditional frameworks such as GxP standards and Good Automated Manufacturing Practice (GAMP).

3.1. Evolving regulations

Implementing AI within QMS has evolved from a digital innovation into a regulated practice. To deploy AI effectively, organisations must ensure that their tools not only meet technical performance benchmarks but also adhere to ethical and legal expectations. In today’s regulatory climate, maintaining ongoing compliance demands the agility and foresight to stay ahead of emerging AI-specific frameworks. New and evolving instruments, such as the EU AI Act, EU GMP Annex 22 on artificial intelligence, US Food and Drug Administration (FDA) guidance on AI and the EMA AI workplan, are reshaping expectations around transparency, risk management and ethical use.

3.2. Validation

AI models must not only comply with emerging regulations but also meet rigorous standards for explainability and validation, adding a significant layer of complexity. These expectations, which include model transparency, lifecycle monitoring and human oversight, amount to more than a technical challenge. Achieving this level of compliance calls for substantial investment and an organisational shift spanning data infrastructure, continuous validation and ongoing compliance monitoring.

4 Workforce and organisational

Integrating AI into an existing QMS goes beyond overcoming technical hurdles; it also has a significant human dimension. Once the technology is in place, the real challenge becomes addressing employee resistance and fostering acceptance of these tools.

4.1. Skills gaps and change management

The cultural transition required to adopt AI and data science within QMS is often underestimated. This transformation demands a unique blend of technical proficiency and domain-specific knowledge, which can be daunting for those unfamiliar with emerging technologies. As previously noted, moving from traditional operations to automated platforms may create a disconnect during implementation. A major obstacle is the required shift in mindset, especially when innovative strategies such as proactive quality management are perceived as disruptive. Ensuring organisational readiness is critical to prevent confusion and pushback among stakeholders.

The strategic shift to AI-driven QMS

As emphasised in this post, adopting AI within an existing QMS is more than a technical enhancement; it represents a significant strategic shift. Although this post focuses on four primary barriers to implementation, they are not exhaustive, and their relevance and impact vary widely depending on each organisation’s structure and approach to emerging technologies. For example, financial and strategic challenges were not listed among the four key barriers, yet they play a major role in shaping both the feasibility and the pace of AI integration.

Acknowledging these multifaceted challenges is an opportunity to strengthen an organisation’s foundation for innovation. Anyone seeking to drive this change should consider the following:

Clear objectives

Scalability and flexibility

Ethical and legal considerations

Continuous monitoring and evaluation

Collaboration between humans and AI

We wrap up this blog post with a clear takeaway: the conversation around AI in QMS has moved from “if” to “how”. Stay tuned, as our next post will continue exploring this topic and guide you toward a smooth transition to AI-driven QMS.

Want to learn more about AI in regulated environments?

Check out our first blog post, where we explore the exciting opportunities AI offers in regulated environments.
